Long|AI ASIC part II: AWS, Marvell, Alchip, Microsoft, and Meta

Overview of Major CSPs’ ASICs and Their Attempts to Break Free from the NVIDIA Ecosystem

We will provide a detailed analysis of the ASIC businesses of AWS, Marvell, Alchip, Microsoft, and Meta, as well as the elasticity of their revenue and profit performance.

AWS

Key Insights:

a. Chip Iteration:

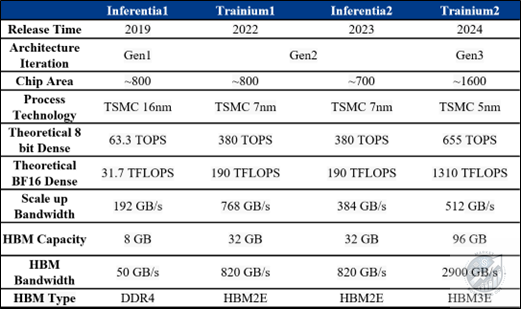

AWS has released the Inferentia1, Inferentia2, and Trainium1 chips. Inferentia2 and Trainium1 share similar architectures, but Inferentia2, the inference part, has only half as many NeuronLink-v2 interconnect ports as Trainium1. In addition, architectural issues make operations compute-bound, which slows prefill.

Trainium2, featuring high bandwidth and large memory, could be pivotal for AWS’s ASIC effort.

b. ASIC Chip Business:

Demand from AWS Cloud Services: GPU types and proportions are largely influenced by customer choices, with Nvidia and AMD GPUs maintaining dominance. To reduce high GPU procurement costs and address supply chain challenges, AWS will continue developing its own chips.

Collaboration with Anthropic: Amazon has invested $4 billion in Anthropic, enabling model training on the AWS platform and providing long-term model capabilities to AWS developers and cloud customers. Currently, Anthropic's largest models have not been trained on AWS chips. Anthropic is also committed to helping AWS improve chip design and ecosystem development.

Order Allocation: Trainium2 will be produced by Marvell, Trainium3 is under negotiation, and Inferentia3 will be manufactured by Alchip (世芯电子). Our analysis shows Alchip could be the ultimate winner in the ASIC play in terms of stock price upside.

Table1: AWS self-developed chips are divided into Inferentia chips for inference and Trainium chips for training

Overview of AWS's In-House ASIC Development:

Trainium2: AWS's third-generation architecture raises bandwidth from just over 700 GB/s to 2,000 GB/s, increasing processing power. The NeuronCore architecture scales out in a GPU-like way and uses a TORUS architecture to build larger clusters with less dependence on switches. This demonstrates AWS's strong hardware foundation, though software and deployment may still lag. Because the architecture differs from that of GPUs, third-party software support might be limited.

Scalability and Ecosystem Challenges: AWS chose TORUS primarily for network scalability in the data center. By linking multiple chips directly to one another, it can build larger networks without NVSwitch-style switches, which maximizes cost-efficiency and network performance. AWS has no NVSwitch equivalent and cannot develop a suitable switch in the short term, so TORUS is the more efficient networking option available to it. The trade-off is that the differences from GPU architectures may hurt third-party software performance, and complex customer demands make it hard to achieve high robustness and comprehensive coverage. Currently, Trainium chips account for less than 10% of inference tasks; that share is expected to reach 50-60% by 2030.
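To make the "direct linking without switches" point concrete, here is a minimal sketch of a torus. It assumes a hypothetical 2D grid of 4 x 4 chips; the article does not state the dimensions or dimensionality of AWS's actual topology, and the function names are illustrative only.

```python
def torus_neighbors(x, y, width, height):
    """Return the four chips directly linked to chip (x, y) in a 2D torus."""
    return [
        ((x - 1) % width, y),   # left neighbor, wrapping around the edge
        ((x + 1) % width, y),   # right neighbor
        (x, (y - 1) % height),  # neighbor below, wrapping around the edge
        (x, (y + 1) % height),  # neighbor above
    ]


def torus_hops(a, b, width, height):
    """Minimum number of chip-to-chip hops between a and b, using wraparound links."""
    dx = abs(a[0] - b[0])
    dy = abs(a[1] - b[1])
    return min(dx, width - dx) + min(dy, height - dy)


WIDTH, HEIGHT = 4, 4  # hypothetical grid size, not taken from the article

corner_neighbors = torus_neighbors(0, 0, WIDTH, HEIGHT)
worst_case_hops = max(
    torus_hops((0, 0), (x, y), WIDTH, HEIGHT)
    for x in range(WIDTH)
    for y in range(HEIGHT)
)

print(corner_neighbors)
print(worst_case_hops)
```

Every chip keeps exactly four peer links however large the grid grows, so the cluster scales without adding switch tiers. The cost is that traffic between distant chips takes several hops through intermediate chips.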

Chip Demand: Most of Trainium2's production will be allocated for Anthropic's use, with external demand limited. Of the expected 55,000 to 60,000 units, approximately 80% will be for internal use.

CUDA-like System Development: If models are controlled by only three companies, CUDA might be unnecessary, allowing developers to use custom operators for optimal efficiency. However, evolving models could increase software ecosystem barriers. While CUDA currently offers strong development tools and frameworks, changes in models may challenge its ecosystem advantages. CUDA remains strong in operator development and inference optimization, but flexibility and scalability are crucial for evolving models.

Outlook on AWS Supply Chain and Partnerships:

Production Partners: Marvell has started producing Inferentia2 and related chips. Trainium3 production is expected to scale up by 2026, with negotiations between Marvell and AWS focused primarily on margins. Alchip (世芯电子) is also vying for production, and the Taiwanese supply chain favors Alchip. However, if AWS has to develop the SerDes on its own, that introduces uncertainty.

Potential Collaboration with Marvell: AWS may decide within the next 2-3 months to continue partnering with Marvell for Trainium3 production; the remaining differences are mainly about pricing and resource investment. AWS believes its chips have AI advantages, especially in CPU and network card integration, and it designed both its first- and second-generation AI chips in-house. AWS brought in Marvell because the new chips' higher bandwidth and memory requirements call for additional resources. The aim is to improve chip performance and bandwidth by combining Marvell's capabilities with AWS's own increased headcount to close technical gaps.

Competitive Pricing Strategy: Despite challenges in developing AI chips, AWS's extensive customer base and position as the largest general-purpose computing platform could offer competitive pricing. AWS might price its chips at half or below Nvidia's, achieving a cost-performance advantage.

Strategic Investment with Anthropic: AWS's $4 billion investment in Anthropic, made so that model training is completed on the AWS platform and model capabilities reach its developers and customers, is a significant strategic commitment. Anthropic's smaller models can be trained, fine-tuned, and served for inference on the platform, while larger models still rely on GPUs. If AWS can scale and apply these models effectively by 2025, it could positively impact its business.

MRVL

Key Insights

a. Marvell ASIC Business Analysis:

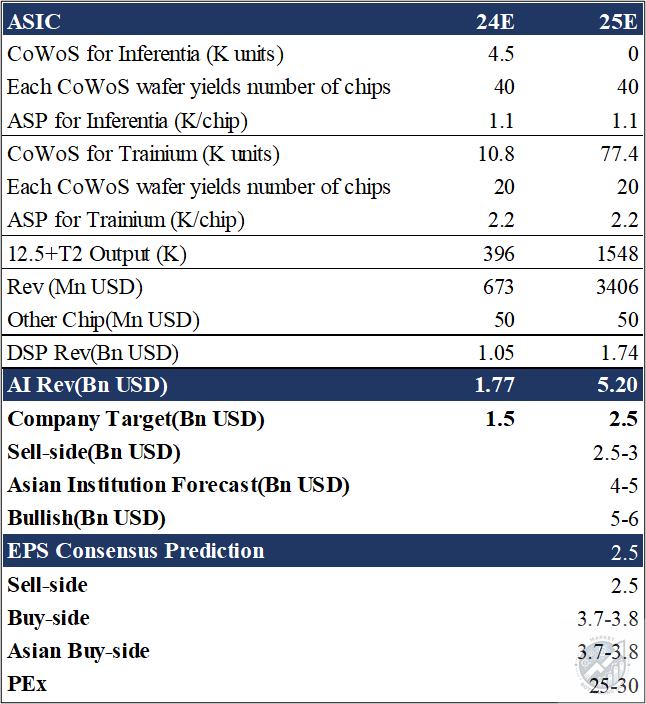

We project Marvell’s AI revenue to reach approximately USD 5 billion by 2025, corresponding to a non-GAAP EPS of around 3.2. Current market expectations are relatively aggressive. In the short term, stock price catalysts include management potentially raising AI revenue guidance in upcoming earnings reports, though the long-term rationale remains unclear.

Before 2019, Marvell did not have a significant ASIC business. This segment was established through the acquisition of Avera from GlobalFoundries for USD 650 million. Broadcom is currently the undisputed industry leader in ASICs, particularly excelling in SerDes capabilities, which command the highest fees. Compared to Broadcom, Marvell's overall technical capabilities lag, but it has a pricing advantage.

From a stock price perspective, Marvell’s ASIC business in 2025 has substantial elasticity. The market holds aggressive expectations for ASIC revenue to exceed USD 5 billion, translating to an EPS of around 3.7-3.8.

However, it is understood that Alchip (世芯电子) will produce Trainium3 instead of Marvell, primarily due to margins. The market currently leans towards Alchip potentially securing the Trainium3 chips. Although there are rumors that Marvell might abandon Trainium3 to partner with Microsoft, Microsoft’s ASICs are less mature than AWS’s. Therefore, uncertainty remains for Marvell in 2026.

Marvell Financial Overview:

Q3 Performance: Marvell’s Q3 results exceeded expectations, and Q4 guidance also surpassed consensus by approximately USD 200 million. The key focus of the earnings call was AI revenue. Last quarter, management stated that AI revenue would "exceed" official expectations, and this quarter they adjusted that to "significantly exceed." The market generally believes management has been conservative in its revenue projections.

Before the earnings release, management held multiple private discussions with investors, hinting that next year’s AI revenue could contribute around USD 4-5 billion, though this was not specified during the earnings call. They reiterated that the total AI market is about USD 40 billion, with Marvell aiming for a 20% market share, targeting USD 8 billion in revenue from this segment. If 2025 progresses smoothly, ASIC chip revenue could conservatively reach USD 3 billion, with future revenues potentially doubling.

Additionally, before the earnings release, Marvell announced a 5-year extension of its partnership with AWS, though specifics about the ASIC chips involved were not disclosed. Thus, this aspect remains uncertain, and Trainium3 negotiations are ongoing, mainly focusing on margins, with initial resolutions expected in the next couple of months.

Collaboration with Microsoft: Marvell emphasized that its partnership with Microsoft is progressing smoothly, anticipating Microsoft to become one of its largest future clients, contributing more revenue than AWS currently does.

Marvell 2025 Projections

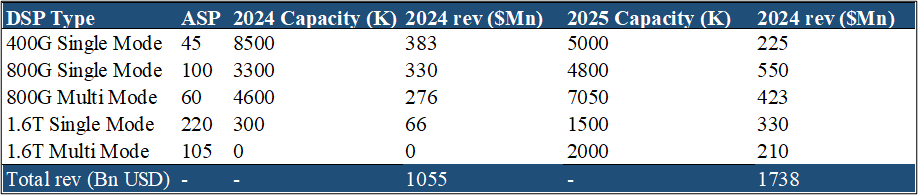

Our estimates focus on the DSP and ASIC segments. For DSP, using an aggressive approach, we expect revenue to approach USD 1.7 billion in 2025. For the ASIC business, we assume shipments of 55,000 units with 15 chips each; given that Trainium2 is a dual-die chip with a 90% yield, total expected shipments next year are approximately 740,000 chips.
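The shipment estimate above can be reproduced with simple arithmetic. The sketch below is one reading of the calculation, assuming the yield is applied as a straight multiplier on units times chips per unit; the text does not spell out how the dual-die packaging enters the figure, and the variable names are illustrative.

```python
units_shipped = 55_000   # assumed unit shipments, from the text
chips_per_unit = 15      # chips per unit, from the text
yield_rate = 0.90        # assumed yield, from the text

# Gross chips before yield loss, then good chips after applying the yield.
gross_chips = units_shipped * chips_per_unit
good_chips = round(gross_chips * yield_rate)

print(gross_chips)
print(good_chips)
```

Under these assumptions the result is 742,500 good chips, which rounds to the roughly 740,000 quoted in the text.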

Table2: MRVL 2024 and 2025 DSP Module Shipment Forecast

Inferentia2 shipments are expected to be limited, with volume coming primarily from Trainium2 chips. The overall ASIC business will see significant growth. In AI chips, Marvell mainly provides the CPU part, which contributes minimally: around USD 50 million this year, unchanged next year. We therefore expect AI revenue of around USD 5.2 billion, compared to the company’s official forecast of USD 2.5 billion. Management described next year’s AI revenue as “significantly exceeding” expectations during the earnings call. Sell-side revenue estimates currently range from USD 2.5 billion to USD 3.1 billion, with potential for further upward revisions. In smaller meetings, management mentioned AI revenue could reach USD 4-5 billion, and some aggressive analysts estimate USD 5-6 billion. Buy-side EPS expectations are between 3.7 and 3.8. The market generally believes that, although the short-term logic is hard to verify, if the stock price reaches USD 110-120, investors might be reluctant to hold long-term, especially with significant uncertainties looming in 2026.
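As a rough check on how far the AI revenue estimate above sits from guidance and from the sell side, the sketch below computes the percentage gaps. It uses only the figures quoted in the paragraph; the variable names are illustrative.

```python
official_guidance_usd_bn = 2.5       # company's official AI revenue forecast
article_estimate_usd_bn = 5.2        # AI revenue estimate from the text
sell_side_range_usd_bn = (2.5, 3.1)  # low and high sell-side estimates

# Relative gap of the 5.2 billion estimate over each reference point.
gap_vs_guidance = article_estimate_usd_bn / official_guidance_usd_bn - 1
gap_vs_sell_side_high = article_estimate_usd_bn / sell_side_range_usd_bn[1] - 1

print(f"vs. official guidance: {gap_vs_guidance:.0%}")
print(f"vs. top of sell-side range: {gap_vs_sell_side_high:.0%}")
```

The estimate is a little more than double the official forecast and about two-thirds above the top of the sell-side range, which is the room for upward revisions the paragraph refers to.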

Table3: MRVL 2024 and 2025 AI Rev & EPS Prediction

Participants in multiple private meetings in Taiwan expressed optimism about AWS’s future 3nm chip progress and order allocations. Marvell maintains high gross margins primarily because of the high margins in ASIC design and production and its comprehensive service system. Without these value-added services, Marvell’s margins might align with those of other backend suppliers. Currently, there is no significant difference between Broadcom and Marvell in DSP other than pricing. Broadcom often bundles DSP with other products, which can affect pricing, whereas Marvell’s prices may be 10-20% higher. Historically, Marvell held a substantial DSP market share, but next year could see changes as NVIDIA enters the DSP market and Broadcom aims to compete with Marvell in the 1.6T segment. Consequently, Marvell’s DSP market share is expected to decline, though the extent depends on specific market conditions.

Taiwan Competitor - Alchip Technologies (世芯电子)

Alchip Technologies, Ltd. was founded in 2003 and is headquartered in Taipei, Taiwan. The company specializes in providing high-complexity, high-volume Application-Specific Integrated Circuits (ASICs) for system companies, with products widely used in the Artificial Intelligence (AI) and High-Performance Computing (HPC) sectors. As of December 18, 2024, Alchip’s stock price stands at NT$3,340 per share. Based on the analysis that follows, we believe there is significant upside. In our 2026 base case, EPS could be 243 NTD. In the bull case (assuming Trainium 3 could be the TPU4 moment for AWS, which is quite likely as Trainium 3 is indeed the 4th generation ASIC for AWS), EPS could be 396 NTD. Assuming a 20x P/E, the stock price could be 4,858 NTD and 7,927 NTD, respectively.
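The target prices above follow from multiplying EPS by the assumed P/E. The sketch below uses the rounded EPS figures quoted in the text, so its results differ by a few NTD from the quoted targets, presumably because those were computed from unrounded EPS. The upside calculation against the December 18, 2024 price is an illustration, not a figure from the article.

```python
pe_multiple = 20                          # assumed P/E, from the text
current_price_ntd = 3340                  # share price as of December 18, 2024
eps_ntd = {"base": 243, "bull": 396}      # 2026 EPS scenarios (rounded)

targets = {}
upside = {}
for case, eps in eps_ntd.items():
    targets[case] = eps * pe_multiple                     # implied target price
    upside[case] = targets[case] / current_price_ntd - 1  # gain vs. current price
    print(f"{case}: target {targets[case]} NTD, upside {upside[case]:.0%}")
```

With the rounded inputs, the base case implies 4,860 NTD and the bull case 7,920 NTD, close to the 4,858 NTD and 7,927 NTD stated above.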

Below is our calculation process.
