New Optical Opportunities Arising from Emerging Models such as o1 and o3

CPO Is a Game Changer, While OIO Holds Greater Long-Term Promise

New Connectivity Requirements Driven by Models

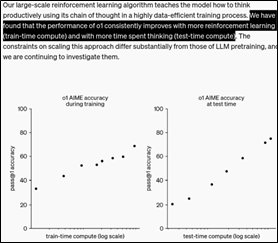

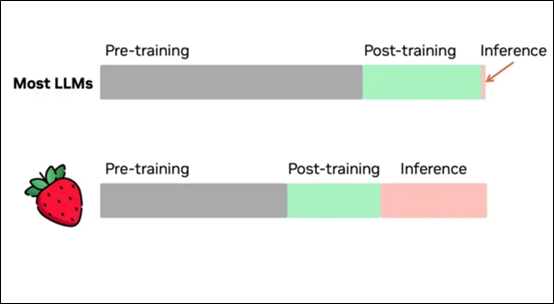

With OpenAI's release of the o1 and o3 models, together with the diversified computational demands of the Orion model, the focus of computing resources has expanded beyond pre-training to include post-training and inference. In particular, post-training has shifted from the relatively light SFT+RLHF recipe to the far more resource-intensive combination of RL+CoT and synthetic-data processing, which requires substantially more computational power. This shift has significantly amplified overall demand for computing resources.

Model Training Phase

The current application of Reinforcement Learning (RL) in model training has driven a sharp rise in computational demand. Compared with the previous generation's human-versus-model adversarial setup, today's AI-versus-AI adversarial interactions require far more resources. Post-training computational demands now surpass those of pre-training, posing new challenges for existing computing solutions. Scaling RL further to push performance boundaries demands still more compute. As a result, the need for computing resources continues to grow exponentially, with no clear upper limit in sight. Experimental setups frequently require tens of thousands of GPUs, placing unprecedented demands on cluster architecture and design.

Figure 1: o1 performance smoothly improves with both train-time and test-time compute

Figure 2: RL models require longer context windows

Figure 3: Post-training and inference require more computational power

Inference Phase

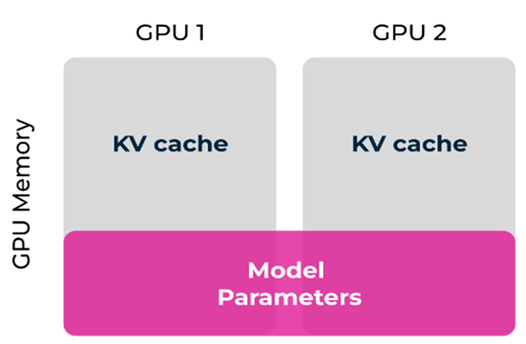

In the inference phase, the effectiveness of Chain-of-Thought (CoT) reasoning depends heavily on the length and quality of the context. Handling longer contexts and more complex reasoning tasks has therefore become a critical capability, as reflected in the significant growth of KV cache sizes. Multi-GPU inference complicates matters further: once model parameters and their growing KV caches are distributed across GPUs, inter-GPU communication adds latency.
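To make the KV cache growth concrete, the standard sizing for a decoder-only transformer is 2 (one key and one value tensor) × layers × KV heads × head dimension × sequence length × batch size × bytes per element. The sketch below applies that formula; the model shape in the usage loop (80 layers, 8 KV heads, head dimension 128, 16-bit storage) is a hypothetical placeholder, not a figure for any specific model.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, batch_size: int = 1, bytes_per_elem: int = 2) -> int:
    """KV cache size for a decoder-only transformer.

    The leading 2 counts one key and one value vector per layer, per KV head,
    per token. bytes_per_elem=2 assumes FP16/BF16 storage.
    """
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * batch_size * bytes_per_elem


if __name__ == "__main__":
    # Hypothetical model shape: 80 layers, 8 KV heads, head_dim 128.
    for seq_len in (8_192, 131_072):
        gib = kv_cache_bytes(80, 8, 128, seq_len) / 2**30
        print(f"{seq_len:>7} tokens -> {gib:.1f} GiB per sequence")
```

Under these assumptions the cache is 2.5 GiB per sequence at 8K tokens and 40 GiB at 128K tokens; because it also scales linearly with batch size, long-context serving quickly outgrows a single GPU's memory.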

Additionally, as model sizes continue to grow (from hundreds of billions to trillions of parameters, with future models potentially reaching tens of trillions), a single GPU is no longer sufficient to host an entire model. This necessitates model partitioning and parallel processing, which introduces additional communication latency. These challenges drive the need for increasingly complex cluster architectures, requiring both scale-up and scale-out strategies to achieve high-performance inference.
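A back-of-the-envelope calculation shows why: weight memory alone is parameter count × bytes per parameter. The sketch assumes 16-bit weights (2 bytes each) and a hypothetical 80 GB of memory per GPU; both are illustrative defaults, not figures from the text, and the function name is hypothetical.

```python
import math


def min_gpus_for_weights(num_params: float, bytes_per_param: float = 2.0,
                         gpu_mem_bytes: float = 80e9) -> int:
    """Lower bound on GPUs needed just to hold the weights.

    Ignores KV cache, activations and runtime overhead, so real
    deployments need more GPUs than this returns.
    """
    return math.ceil(num_params * bytes_per_param / gpu_mem_bytes)


if __name__ == "__main__":
    for params in (175e9, 1e12, 10e12):
        print(f"{params / 1e9:,.0f}B params -> at least {min_gpus_for_weights(params)} GPUs")
```

Under these assumptions a one-trillion-parameter model needs at least 25 GPUs for its weights alone, and a ten-trillion-parameter model at least 250, before any KV cache is counted.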

In the simplest two-GPU case, for example, half of the model parameters are stored on one GPU and the other half on the second, so each forward pass must exchange intermediate results between the two devices.

Figure 4: Visualization of multi-GPU inference in GPU memory

Figure 5: Model Parallelism
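The two-GPU split can be sketched in NumPy, with array shards standing in for separate GPUs. The sketch assumes a column-wise (tensor-parallel) split of a single linear layer, one of several possible partitioning schemes; the text does not specify which is used. Each "device" multiplies the shared input by its own half of the weights, and concatenating the partial outputs stands in for the all-gather that real GPUs perform over their interconnect.

```python
import numpy as np

rng = np.random.default_rng(0)

batch, d_in, d_out, n_devices = 4, 8, 6, 2
x = rng.standard_normal((batch, d_in))   # activations, replicated on every device
W = rng.standard_normal((d_in, d_out))   # full weight matrix of one linear layer

# Column-wise split: each device stores d_out / n_devices columns of W,
# i.e. half of this layer's parameters in the two-device case.
shards = np.split(W, n_devices, axis=1)

# Each device multiplies the shared input by its local shard only.
partial_outputs = [x @ shard for shard in shards]

# Reassembling the full output requires exchanging partial results between
# devices (an all-gather); here that communication step is a concatenate.
y_parallel = np.concatenate(partial_outputs, axis=1)

y_reference = x @ W
print(np.allclose(y_parallel, y_reference))  # True
```

On real hardware, that gather step is the inter-GPU traffic that makes interconnect bandwidth and latency decisive for multi-GPU inference.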

GPU Cluster Development Trends

Clusters are evolving through a combination of scale-up and scale-out strategies. Scale-up expands individual nodes into larger configurations, such as 72 GPUs per cabinet, which makes it ideal for model parallelism with heavy communication demands. Scale-out connects multiple nodes over interconnects such as InfiniBand or Ethernet and is better suited to data parallelism, where large datasets or complex inference workloads are involved.
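A common way to apply this division of labor is to keep communication-heavy model-parallel groups inside one scale-up domain and spread data-parallel replicas across domains over the scale-out network. The sketch below assigns global GPU ranks under that policy; the 72-GPU domain size comes from the text, while the group size of 8, the `place_ranks` name and the consecutive-rank layout are hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Placement:
    domain: int   # scale-up domain (e.g. one 72-GPU cabinet) holding this GPU
    tp_rank: int  # position inside its model-parallel group
    dp_rank: int  # data-parallel replica this GPU belongs to


def place_ranks(total_gpus: int, domain_size: int = 72, tp_size: int = 8) -> list[Placement]:
    """Assign global ranks so model-parallel groups never span two domains.

    Consecutive ranks form a model-parallel group; because tp_size divides
    domain_size, each group lies entirely inside one scale-up domain, and
    only data-parallel traffic has to cross the scale-out network.
    """
    if domain_size % tp_size != 0:
        raise ValueError("tp_size must divide domain_size")
    if total_gpus % domain_size != 0:
        raise ValueError("total_gpus must be a whole number of domains")
    return [
        Placement(domain=r // domain_size, tp_rank=r % tp_size, dp_rank=r // tp_size)
        for r in range(total_gpus)
    ]


if __name__ == "__main__":
    layout = place_ranks(total_gpus=144)
    replicas = layout[-1].dp_rank + 1
    domains = layout[-1].domain + 1
    print(f"{replicas} model-parallel replicas spread over {domains} domains")
```

With 144 GPUs this yields 18 eight-GPU replicas across two 72-GPU domains, and no replica ever depends on the slower scale-out link for its tightly coupled traffic.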

Today's largest clusters, such as xAI's 100,000-GPU cluster and Meta's clusters of tens of thousands of GPUs, focus on optimizing configurations to maximize performance, both to meet the training requirements of next-generation models and to support customized customer solutions. As models grow in scale and complexity, demands on interconnect technology will intensify, requiring more robust and efficient communication solutions.

NVIDIA's Short-Term Advantages

For NVIDIA, the industry's interconnect limitations are becoming a strategic advantage as long-term demand for computing power surges. In the short term, its barriers in both software and hardware architecture are being reinforced. Meeting the computational demands of next-generation models will require progress on several fronts at once, in both scaling and technology.

Currently, NVIDIA's hardware strengths center on NVLink and the NVL72 rack products built on it. With ongoing advances in optical interconnect technology, NVIDIA is well-positioned to widen the gap with its competitors further. In scale-up interconnect, NVIDIA holds a significant edge: each NVLink and NVSwitch generation has raised inter-GPU bandwidth, and the fifth generation delivers 1.8 TB/s per GPU, double its predecessor. AMD's competing products offer roughly half that bandwidth and lack a comparable switch technology. This allows NVIDIA to effectively abstract multiple GPUs into a single computational entity, a distinct advantage for complex model inference and training.
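The bandwidth gap translates directly into transfer time. The sketch below uses the 1.8 TB/s per-GPU figure and a half-speed alternative, matching the comparison above; the 10 GB payload is a hypothetical example, and the calculation ignores latency, protocol overhead and contention, so it is a best-case bound.

```python
def transfer_ms(payload_gb: float, bandwidth_tb_s: float) -> float:
    """Best-case time to move payload_gb over a link of bandwidth_tb_s.

    GB divided by TB/s gives milliseconds directly (1 TB = 1000 GB).
    Latency, protocol overhead and contention are ignored.
    """
    return payload_gb / bandwidth_tb_s


def domain_bandwidth_tb_s(num_gpus: int, per_gpu_tb_s: float) -> float:
    """Aggregate bandwidth of a scale-up domain with every GPU at full link rate."""
    return num_gpus * per_gpu_tb_s


if __name__ == "__main__":
    payload_gb = 10.0  # hypothetical tensor exchange size
    for label, bw in (("1.8 TB/s per GPU", 1.8), ("half of that", 0.9)):
        print(f"{label}: {transfer_ms(payload_gb, bw):.2f} ms for {payload_gb:.0f} GB")
    print(f"72-GPU domain: {domain_bandwidth_tb_s(72, 1.8):.1f} TB/s aggregate")
```

Under these idealized assumptions, halving per-GPU bandwidth doubles every transfer, and a fully populated 72-GPU domain aggregates roughly 130 TB/s.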

To meet escalating interconnect demands, NVIDIA is collaborating with partners to develop larger-scale cluster solutions, such as the NVL288. These efforts aim to address the growing requirements of large-scale inference and training tasks.

As the market evolves, companies may increasingly invest in developing their own solutions. Under normal circumstances, this would benefit the industry by fostering competition and innovation. However, geopolitical factors, particularly US-China relations, are reshaping the competitive landscape. NVIDIA not only controls its proprietary technologies but also holds key resources, giving it a near-monopolistic position. This concentration of market power pressures other companies to develop their own technologies independently in order to remain competitive.

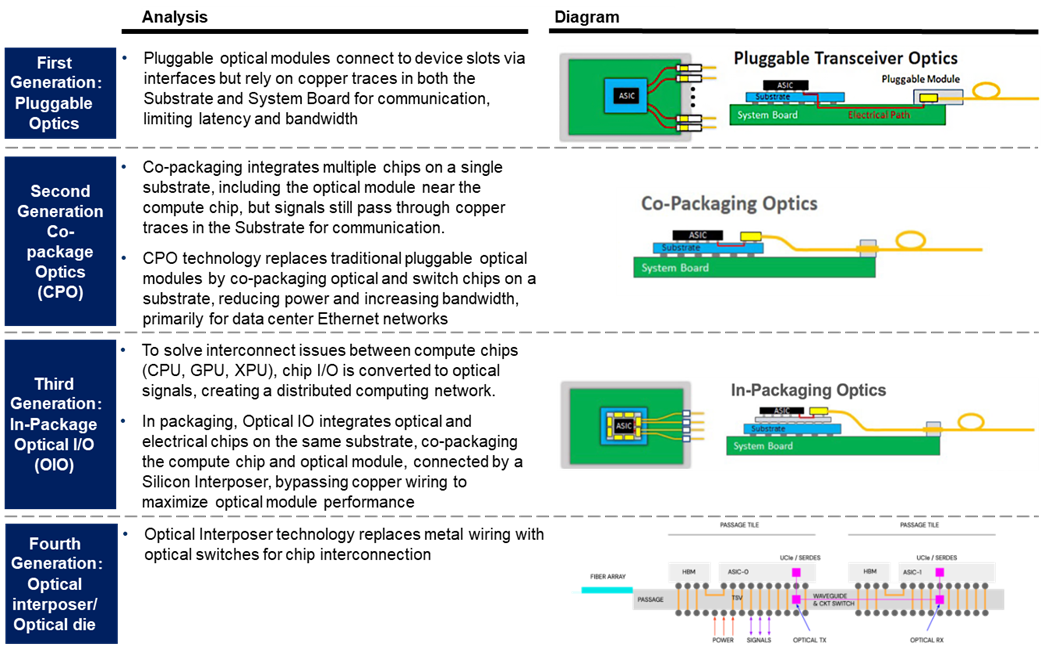

CPO Technology Overview

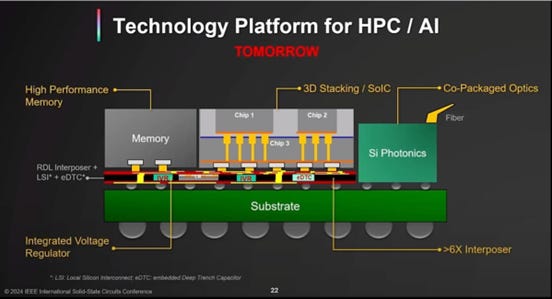

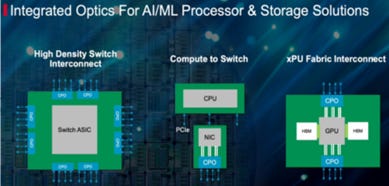

Optical-module packaging is transitioning from traditional pluggable modules toward integrated optoelectronic packaging, with CPO as the destination. Pluggable modules remain the mainstream approach today. Second-generation technologies move the optoelectronic modules directly onto the board, closer to the host switch or compute chips. Third-generation technologies aim to integrate optical interfaces and electronic chips within the same package, yielding a fully unified optoelectronic packaging solution.

Figure 6: CPO Technology Iteration Process

Compared with traditional DSP (Digital Signal Processor) optical modules, CPO (Co-Packaged Optics) modules deliver notable power savings, but their edge over LPO (Linear Pluggable Optics), which also eliminates the DSP, is less significant. CPO is expected to advance through higher-level packaging innovations that further reduce power consumption and improve performance. Even so, while emerging CPO solutions offer better power efficiency and more compact form factors, they still face substantial challenges in engineering execution and supply chain integration. As a result, existing manufacturers may favor LPO over CPO in DSP-free designs, and CPO is unlikely to be adopted universally.
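Power comparisons between these module types reduce to energy per bit multiplied by line rate. This sketch shows the unit arithmetic only; the 10 pJ/bit, 6 and 5 pJ/bit and 51.2 Tb/s inputs are hypothetical placeholders, not measured or vendor figures for DSP, LPO or CPO modules.

```python
def optics_power_w(data_rate_tbps: float, energy_pj_per_bit: float) -> float:
    """Optical I/O power in watts.

    1 Tb/s x 1 pJ/bit = 1e12 bit/s x 1e-12 J/bit = 1 W, so multiplying the
    two values in these units yields watts directly.
    """
    return data_rate_tbps * energy_pj_per_bit


def savings_w(data_rate_tbps: float, baseline_pj: float, improved_pj: float) -> float:
    """Watts saved when energy per bit falls from baseline_pj to improved_pj."""
    return data_rate_tbps * (baseline_pj - improved_pj)


if __name__ == "__main__":
    # Hypothetical inputs for illustration only; not measured values.
    print(f"1.6 Tb/s module at 10 pJ/bit: {optics_power_w(1.6, 10.0):.1f} W")
    print(f"51.2 Tb/s of switch I/O, 1 pJ/bit saved: {savings_w(51.2, 6.0, 5.0):.1f} W")
```

The takeaway is that every picojoule per bit removed is worth one watt per terabit per second, which is why small efficiency gaps (such as CPO versus LPO) matter most at high aggregate bandwidth.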

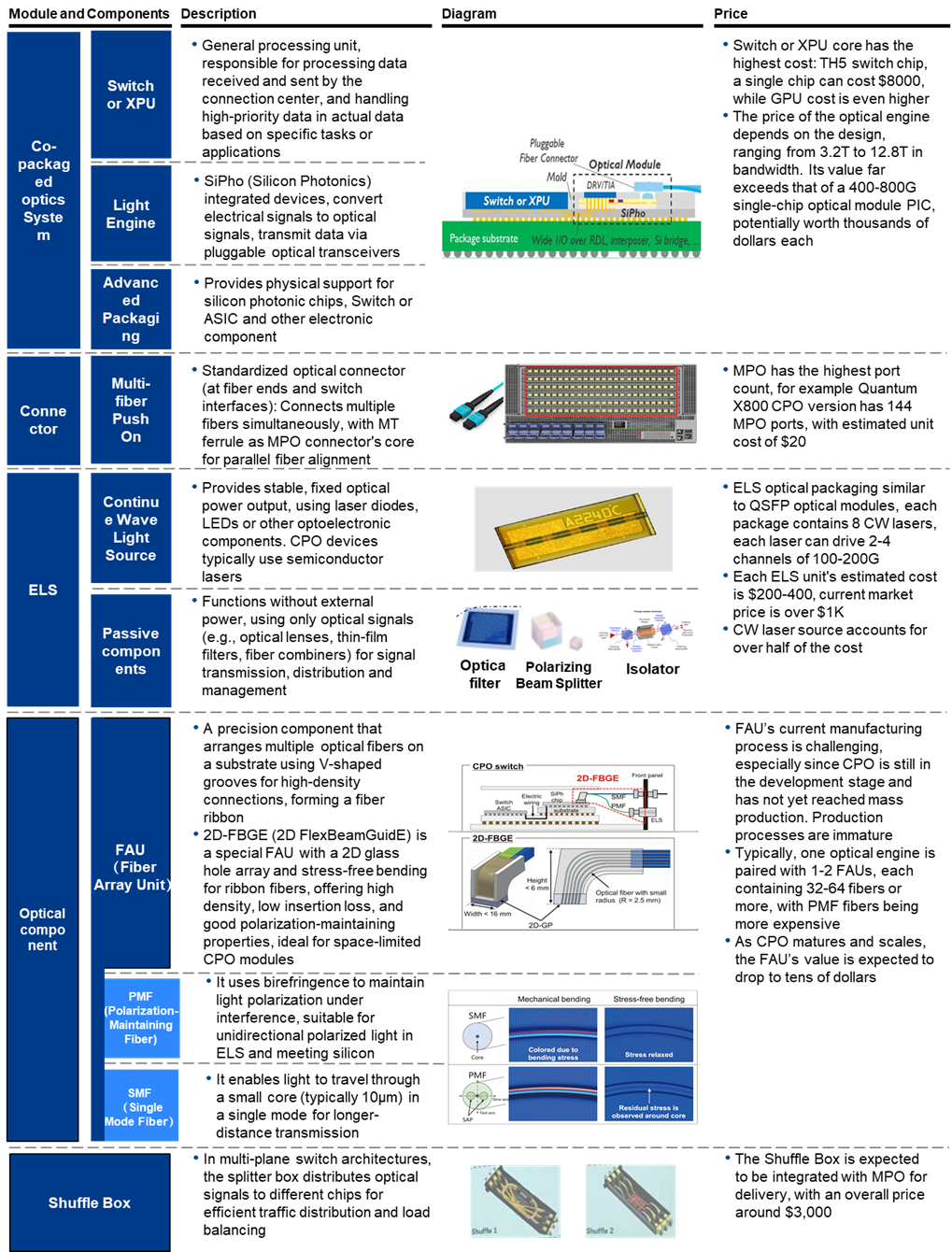

Value Breakdown of Core Components in CPO Packaging

Figure 7: Value of Core Components in CPO Packaging

Switch and XPU

For CPO, the most critical components are undoubtedly the switch chip and the XPU. The switch is the core element, handling high-speed data transmission and processing. The Optical Engine, however, likely accounts for the largest cost share. Built on silicon photonics, each Optical Engine is priced at roughly $500–$1,000. With per-engine bandwidths typically ranging from 3.2T to 12.8T, a single CPO design may require six, eight, or even twelve to sixteen Optical Engines, driving significant demand for these components.
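The engine count follows from dividing total switch bandwidth by per-engine bandwidth, and the cost range from the $500–$1,000 per-engine price above. The sketch assumes a hypothetical 51.2 Tb/s switch ASIC; that capacity and the function names are illustrative, not taken from the text.

```python
import math

OE_PRICE_RANGE_USD = (500, 1_000)  # per Optical Engine, from the text


def optical_engines_needed(switch_tbps: float, engine_tbps: float) -> int:
    """Whole engines required to cover the switch's total I/O bandwidth."""
    return math.ceil(switch_tbps / engine_tbps)


def engine_cost_range(n_engines: int) -> tuple[int, int]:
    """Low and high total engine cost for n_engines."""
    low, high = OE_PRICE_RANGE_USD
    return n_engines * low, n_engines * high


if __name__ == "__main__":
    for engine_tbps in (3.2, 6.4, 12.8):
        n = optical_engines_needed(51.2, engine_tbps)  # hypothetical 51.2T switch
        low, high = engine_cost_range(n)
        print(f"{engine_tbps:>4} Tb/s engines: {n:>2} needed, ${low:,}-${high:,}")
```

For the assumed 51.2 Tb/s switch, 3.2T engines mean 16 units and $8,000 to $16,000 of engine content, which illustrates why the Optical Engine dominates the bill of materials.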

MPO and External Laser Sources (ELS)

The next most significant components, though much cheaper, are MPO (Multi-Fiber Push-On) connectors, which serve as the integrated fiber interface on the panel. MPOs are in high demand yet relatively inexpensive, typically costing $10 to $20 each.

External Laser Sources (ELS) are another cost-effective component. Lasers are relatively prone to failure, so integrating them inside the CPO package could render the entire chip unusable if one fails. Laser heat dissipation is also difficult to manage in-package, further favoring external sources. An ELS functions as an optical module with only a transmitter (TX) and no receiver (RX). To drive more channels, the number of TX modules is typically doubled, yielding configurations of eight or sixteen units.

Fiber Array Unit (FAU)

FAUs (Fiber Array Units) are critical components of optical modules, characterized by complex manufacturing processes that currently depend heavily on manual alignment. This dependency limits both production yields and capacity. At present, FAUs are priced at approximately $2,000 each, reflecting the intricacy of their production and low yield rates.

To scale production and lower costs, automation in FAU manufacturing is essential. However, specialized automated production lines are not yet available, and the automation of FAU production remains in the research and development phase.

Prospects for Large-Scale CPO Adoption

In the long term, the widespread adoption of CPO (Co-Packaged Optics) will hinge less on its advantages in power efficiency or cost savings and more on whether traditional optical modules can continue evolving to support higher speeds. In the short term, adopting LPO (Linear Pluggable Optics) solutions provides benefits such as energy efficiency and simplified maintenance, helping CSP (Cloud Service Provider) vendors avoid over-reliance on major players like NVIDIA and Broadcom.

According to TSMC's CPO roadmap, the main products slated for mass production in 2025 are pluggable 1.6T optical modules. In 2026, CPO will begin deploying on the switch side, with TSMC integrating Optical Engines with switch chips and companies such as Tianfu Communications supplying the Optical Engines.

In the long run, large-scale CPO adoption will likely occur only when traditional optical modules reach their limits and can no longer scale to higher speeds. For TSMC, the greatest opportunities are expected after 2027, particularly in GPU all-optical interconnect (OIO), an area currently dominated by NVIDIA. Unlike CPO, OIO is less likely to further constrain CSP vendors' growth. In the OIO era, TSMC could also play a pivotal role by offering customized solutions or standardized interfaces, enabling mid-sized chip designers to build XPUs and related products and expand their market presence.

Figure 8: TSMC's CPO roadmap

Earnings Upside for CPO Companies

Industry research projects that CPO switch shipments will reach approximately 600 units in 2025: 300 test CPO switches each for InfiniBand (IB) and Spectrum, primarily for key customers such as NVIDIA and Microsoft. Spectrum switch shipments reached 30,000 units this year and are expected to exceed 30,000 in 2025, putting CPO penetration below 1%. Shipments are projected to grow tenfold to about 6,000 units in 2026 and to reach 24,000 units in 2027.
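The projections can be checked with a few lines of arithmetic. The sketch uses only figures from the text, reads "next year" as 2025 (an interpretation), and treats 30,000 units as the 2025 Spectrum baseline; since shipments are expected to exceed that, the computed 1% is an upper bound, consistent with the "below 1%" claim.

```python
# Projected CPO switch shipments from the text ("next year" read as 2025).
cpo_shipments = {2025: 600, 2026: 6_000, 2027: 24_000}
spectrum_cpo_2025 = 300          # Spectrum share of the 2025 test units
spectrum_baseline_2025 = 30_000  # lower bound on total 2025 Spectrum shipments

# Upper bound on penetration, because the true denominator is expected to be larger.
penetration_2025 = spectrum_cpo_2025 / spectrum_baseline_2025

# Year-over-year growth multiples implied by the projections.
growth = {year: cpo_shipments[year] / cpo_shipments[year - 1] for year in (2026, 2027)}

print(f"2025 Spectrum CPO penetration: at most {penetration_2025:.1%}")
for year, multiple in growth.items():
    print(f"{year}: {multiple:.0f}x year over year")
```

The figures imply 10x growth into 2026 followed by a slower 4x into 2027.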

Based on the value of individual switches and their components, combined with a 20% net profit margin, the incremental profits for relevant manufacturers in 2027 are estimated as follows:

TFC: Approximately $157 million

Taichen Lighting: Approximately $6 million
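If each estimate is simply 2027 CPO switch units × per-switch content value × the 20% net margin (an assumption about how the figures were built, since the text does not give the per-switch values), the implied content per switch can be backed out as below. The function name is hypothetical.

```python
NET_MARGIN = 0.20   # net profit margin stated in the text
UNITS_2027 = 24_000  # projected 2027 CPO switch shipments

# 2027 incremental profit estimates from the text, in USD.
profit_estimates_usd = {"TFC": 157e6, "Taichen Lighting": 6e6}


def implied_content_per_switch(profit_usd: float, units: int = UNITS_2027,
                               margin: float = NET_MARGIN) -> float:
    """Back out revenue per switch, assuming profit = units x content x margin."""
    return profit_usd / (units * margin)


if __name__ == "__main__":
    for company, profit in profit_estimates_usd.items():
        print(f"{company}: ~${implied_content_per_switch(profit):,.0f} per CPO switch")
```

Under that reading, TFC's estimate implies roughly $32,700 of content per CPO switch and Taichen Lighting's about $1,250; if the source's calculation included other adjustments, the true per-switch values would differ.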

Because CPO cannibalizes the pluggable optical engine business, actual incremental profits may be even lower. Major customers such as Meta and Microsoft also prefer traditional pluggable module solutions for their more open and stable ecosystems. Even as Microsoft gradually adopts CPO, progress remains slow, suggesting that the reliability of current CPO solutions still needs improvement.

The optical module industry is currently led by Chinese players such as InnoLight and Eoptolink, while supply shortages and geopolitical issues are causing some orders to overflow to companies such as AAOI. As demand for 800G and 1.6T optical modules rises, AAOI's profitability is improving markedly. Lumentum is also expanding into optical chips and modules and could become a major beneficiary. Chinese optical module manufacturers are not expected to see a significant impact on their 2025 results, but they should monitor international policy changes, such as tariff adjustments, that could affect the market.

Overview of OIO Technology

OIO leverages silicon photonics and miniature optoelectronic devices, integrating micro-lasers, modulators, waveguides, and photodetectors. This integration overcomes the RC delay and crosstalk of traditional copper interconnects, enabling data transmission at ultra-high bandwidth density between GPUs, XPUs, ASICs, and other computing chips. Compared with CPO, OIO has a newer ecosystem in which GPU manufacturers exert stronger control, which makes it easier to commercialize.

Figure 9: Broadcom's OIO Technology Roadmap

Figure 10: TSMC's OIO Technology Roadmap

Figure 11: NVIDIA's OIO-based Large Cluster Technology Roadmap

Advantages and Challenges of OIO Technology


Subscribe to FundamentalBottom to keep reading this post and get 7 days of free access to the full post archives.