Tesla's recent technological advancements underscore its commitment to innovation in the automotive and artificial intelligence sectors. At the October 10, 2024, Robotaxi Day event, CEO Elon Musk unveiled the "Cybercab," a prototype robotaxi designed to operate autonomously without a steering wheel, targeting production by 2027. This ambitious project aims to offer a cost-effective autonomous transportation solution, with a projected price under $30,000. In its Q3 2024 earnings call later that month, Tesla reported a 9% increase in earnings, surpassing analyst expectations. The company also highlighted significant progress in its Full Self-Driving (FSD) technology, which contributed to the improved financial performance. Additionally, Tesla announced plans to begin production of more affordable vehicle models in early 2025, further expanding its market reach.

Following these developments, Tesla's stock experienced notable fluctuations. After the Robotaxi Day event, shares fell nearly 9%, reflecting investor concerns over the feasibility and regulatory challenges of the autonomous vehicle initiative. The positive earnings report led to a subsequent surge, with the stock rising approximately 12% in after-hours trading.

To understand Tesla's core value proposition, it is essential to look beyond Q3 earnings and the upcoming affordable models and focus on the company's trajectory in autonomous driving. Central to this is Tesla's application of scaling laws, which have been pivotal to the success of companies like OpenAI and Nvidia. By leveraging scaling laws, Tesla could continuously enhance its AI capabilities, potentially leading to significant advances in autonomous driving and solidifying its position as a leader in the automotive, AI, and robotics industries.

What is Tesla’s Scaling Law?

The core idea of a scaling law is that increasing computational resources predictably improves model performance. In large language models, performance is typically measured by a loss metric, such as next-token prediction loss. However, recent findings suggest that a falling loss alone is insufficient to measure performance on complex tasks such as mathematical reasoning or code generation. The autonomous driving sector faces similar challenges. Although Tesla has not released detailed data, we can chart performance from publicly available information: the Y-axis shows miles per critical intervention (a measure of how often a human must step in), and the X-axis shows the computational resources used. Tesla's computational environment is complex, spanning both cloud computing (for training) and edge computing (in the vehicle). Tesla currently uses the Hardware 4.0 platform, with an upgrade to 5.0 expected within the next one to two years; until then, FSD's on-vehicle performance remains constrained by the existing hardware. Together, these factors shape both how autonomous driving performance is evaluated and how the technology will develop.

Figure 1. Scaling of Tesla’s FSD performance vs. computing power. Computing power is normalized to the number of H100 GPUs.
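To make the chart's logic concrete, here is a minimal sketch of how a scaling law is usually fitted: a power law, metric ≈ a·C^b, estimated by least squares on log-log axes. The data points are hypothetical values generated from an assumed law (a = 2, b = 0.5), not Tesla's figures, and the function names `fit_power_law` and `predict` are our own.

```python
import math


def fit_power_law(compute, metric):
    """Fit metric ~= a * compute**b by least squares on log-log axes; returns (a, b)."""
    if len(compute) != len(metric) or len(compute) < 2:
        raise ValueError("need at least two paired observations")
    if min(compute) <= 0 or min(metric) <= 0:
        raise ValueError("power-law fits require positive values")
    xs = [math.log(c) for c in compute]
    ys = [math.log(m) for m in metric]
    n = len(xs)
    x_mean, y_mean = sum(xs) / n, sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("compute values must not all be equal")
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    b = sxy / sxx                        # slope on log-log axes = scaling exponent
    a = math.exp(y_mean - b * x_mean)    # intercept mapped back to linear scale
    return a, b


def predict(a, b, compute):
    """Extrapolate the fitted power law to a new compute level."""
    return a * compute ** b


# Hypothetical points generated from an assumed law y = 2.0 * C**0.5 (NOT Tesla data).
# Compute is expressed in H100 equivalents, as in Figure 1.
compute_h100 = [1_000, 5_000, 20_000, 100_000]
miles_per_ci = [2.0 * c ** 0.5 for c in compute_h100]

a, b = fit_power_law(compute_h100, miles_per_ci)
print(f"fitted exponent b = {b:.3f}")
print(f"extrapolated value at 1,000,000 H100e: {predict(a, b, 1_000_000):,.0f}")
```

Because the fit is linear in log space, a single exponent b summarizes how much each doubling of compute buys (a factor of 2^b). That is what makes extrapolation to larger clusters possible, and also what makes it risky if the trend bends.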

Tesla's Hardware 3.0 (HW3) uses two first-generation FSD chips, each offering about 70T (trillion operations per second) of computing power. Because the two chips serve as redundant backups for each other, the usable computing power is limited to about 70T. This chip generation is a relatively early design, and its inference capability is constrained by memory bottlenecks.

In Hardware 4.0, Tesla upgraded to its second-generation FSD chip, which delivers more than 100T but less than 200T of computing power, leaving it behind Nvidia's Orin. The current configuration improves on-vehicle (edge) computing, but limitations remain. Looking ahead, Tesla anticipates that Hardware 5.0 will reach 1000T-2000T. On the training side, Tesla is continuously adding compute, particularly H100 GPUs, which are crucial for model training. During the development of V11, Tesla used only a limited number of H100s, because that model's PnR module was a small, rule-based classification model that required relatively little training compute.

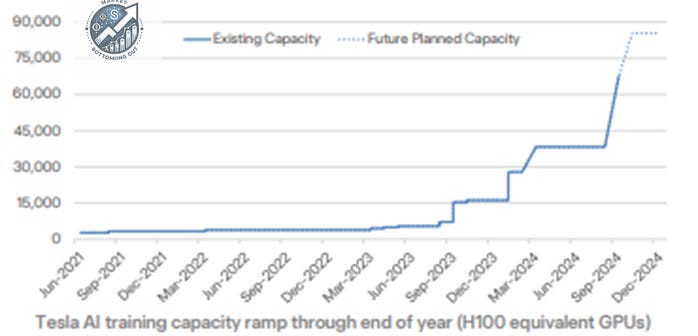

Starting with V12, Tesla shifted to end-to-end models. Training V12 used approximately 5,000 H100 GPUs, while V12.5 scaled to roughly 20,000. V13 is expected to be released by the end of 2024, by which point Tesla may have added another 50,000 H100s, bringing its total training compute close to 100,000 H100 equivalents.

Figure 2. Scaling of Tesla’s computing power.

Looking ahead, Tesla plans to expand its computing power further next year. Rumors suggest that Tesla may order 100,000 H200s along with hundreds of thousands of GB200s. Although the exact numbers remain uncertain, they are expected to fall within this range. Each Blackwell (GB200) GPU delivers the computing power of roughly 2 to 3 H100s, and Tesla expects to reach the equivalent of one million H100s next year (excluding xAI). These expansions serve Tesla's ambition to bring its autonomous driving technology to human-level performance next year.
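A quick back-of-the-envelope check shows how these rumored orders relate to the one-million target. The sketch below treats the roughly 100,000 H100 equivalents projected for end-2024 as the starting point, adds 100,000 H200s at an assumed 1.0 H100 equivalent each (our simplification, not a vendor figure), and uses the 2x-3x GB200 factor quoted above. The function names are hypothetical.

```python
import math

# Assumed H100-equivalence factors. The 2x-3x GB200 range comes from the text above;
# counting one H200 as 1.0 H100 is our own simplifying assumption.
H200_FACTOR = 1.0
GB200_FACTOR_RANGE = (2.0, 3.0)


def h100_equivalents(fleet: dict[str, int], factors: dict[str, float]) -> float:
    """Sum GPU counts weighted by each model's H100-equivalence factor."""
    return sum(count * factors[gpu] for gpu, count in fleet.items())


def gb200_needed(target: float, existing: float, per_gb200: float) -> int:
    """GB200 units required to close the gap between existing and target H100 equivalents."""
    gap = max(0.0, target - existing)
    return math.ceil(gap / per_gb200)


# Hypothetical scenario assembled from the figures quoted in the text.
existing = h100_equivalents(
    {"H100": 100_000, "H200": 100_000},
    {"H100": 1.0, "H200": H200_FACTOR},
)
for factor in GB200_FACTOR_RANGE:
    n = gb200_needed(1_000_000, existing, factor)
    print(f"{factor:.0f}x per GB200 -> ~{n:,} GB200 needed")
```

Under these assumptions, the remaining gap calls for roughly 270,000 to 400,000 GB200s, which is consistent with the "hundreds of thousands" in the rumors.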

Collaboration Between xAI and Tesla

Whether Tesla will integrate xAI's models next year remains uncertain; its current models do not yet draw on any such collaboration. On the modeling side, Tesla is making significant progress, particularly between versions 12.4 and 12.5, where it improved its internal labeling system and several other functions so they can gradually work with multimodal large models. This groundwork prepares for future integration with xAI's multimodal large models, suggesting that progress may come next year or the year after.

Currently, Tesla and xAI operate as two independent teams. xAI initially worked within Tesla but is expected to set up its own office soon. In the early stages, Tesla's infrastructure, hardware, and algorithm teams collaborated extensively with xAI, but as the xAI team has matured, its training has become an independent effort. Overall, Tesla's model training and xAI's multimodal training remain independent of each other, though the teams communicate regularly.

Figure 3. xAI’s colossal data center, the largest single cluster of GPUs. Source: Patrick Kennedy

Internal Resource Allocation Patterns for Computational Clusters at Tesla

We believe Tesla's robotics and autonomous driving teams train their models independently, although they share some training at the foundational level; once a certain training threshold is reached, each team's specialized components are trained further on their own. Currently, the FSD team and the Optimus team share a GPU cluster, while xAI has its own independent cluster. The organizational structure remains relatively vertical, which keeps each team's work specialized.

It is difficult for xAI and Tesla's FSD team to use each other's idle compute during training. Tesla's FSD and Optimus teams can shift resources between each other relatively easily, but xAI and Tesla run on different technical platforms, which makes such transfers more complicated. The two sides' large models have not yet been integrated because of current in-vehicle hardware limits: Hardware 4.0 offers just over 100T of computing power, which is insufficient to run xAI's large models effectively. Tesla must wait for Hardware 5.0, at 1000T or more, before it can genuinely integrate xAI's large models.
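A rough, compute-only estimate illustrates why the jump from about 100T to 1000T matters. The sketch assumes the common rule of thumb that a dense transformer's forward pass costs about 2 operations per parameter per token, and it uses a hypothetical perception workload (8 cameras at 36 frames per second, 256 tokens per frame) and 30% sustained utilization; none of these numbers come from Tesla. It also ignores memory capacity and bandwidth, which often bind first on edge chips.

```python
def max_params_compute_bound(tops: float, tokens_per_s: float, utilization: float = 0.3) -> float:
    """Largest dense model (in parameters) whose forward pass fits the compute budget.

    Uses the ~2 operations per parameter per token approximation for a dense
    transformer forward pass. Ignores memory capacity and bandwidth limits.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    ops_per_s = tops * 1e12 * utilization      # sustained operations per second
    return ops_per_s / (2 * tokens_per_s)      # params such that 2 * N * tokens/s fits


# Hypothetical workload: 8 cameras x 36 frames/s x 256 tokens per frame (assumption).
TOKENS_PER_S = 8 * 36 * 256  # 73,728 tokens per second

for name, tops in [("HW4 (~100T)", 100), ("HW5 (1000T)", 1000), ("HW5 (2000T)", 2000)]:
    n_params = max_params_compute_bound(tops, TOKENS_PER_S)
    print(f"{name}: ~{n_params / 1e9:.1f}B parameters")
```

Since the bound is linear in compute, a 10x hardware jump allows roughly a 10x larger model at the same frame rate; under these assumptions HW4 tops out around 0.2B parameters, far below the size of today's large multimodal models.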

Tesla's Aspiration to Achieve Average Human Driving Levels Next Year

Although FSD v13 has not yet been released, the Tesla team believes FSD performance could soon significantly surpass human capabilities, possibly putting it on par with Waymo. Human drivers have a major accident (comparable to a situation requiring external intervention) approximately every 20,000 to 30,000 miles, while Waymo's vehicles may have such incidents only every 100,000 miles. During the Q3 earnings call, Tesla indicated that human drivers have a major accident roughly every 70,000 miles, while FSD has one approximately every 700,000 miles; the two human baselines differ, likely because they rest on different definitions of a major accident. Although results from simulation environments cannot be fully equated with real-world performance, Tesla expresses confidence in achieving human-level performance next year.

However, Tesla's criteria for what counts as an intervention are somewhat ambiguous. Waymo has a professional fleet with defined intervention protocols, whereas Tesla's data depends largely on individual drivers' habits: many experienced drivers intervene according to their own driving preferences, which are not necessarily correct. Tesla therefore lacks a robust standard for the "major interventions per thousand miles" metric, which complicates the measurement of intervention data. A truly reliable evaluation must await data from robotaxis.

Tesla does have data to support its assessment of its autonomous driving system's safety. Tesla must regularly report accident data to U.S. regulators, and the headline figure is calculated by dividing total miles driven by the total number of major accidents. This lets Tesla report FSD's accident rate. According to publicly available reports, FSD's accident rate is significantly lower than that of human drivers, pointing to a safety advantage. Tesla's insurance pricing reflects this as well: owners of FSD-equipped vehicles enjoy lower premiums.
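Because miles per accident is a ratio of two totals, its reliability depends on how many accidents it rests on. The sketch below attaches an exact (Garwood) Poisson confidence interval to that ratio, assuming accidents occur independently at a constant rate per mile. The mileage and accident counts are hypothetical, chosen to contrast a small robotaxi pilot with fleet-scale data at the same point estimate.

```python
import math


def poisson_cdf(k: int, mu: float) -> float:
    """P(X <= k) for X ~ Poisson(mu), accumulating terms in log space."""
    if k < 0:
        return 0.0
    if mu == 0:
        return 1.0
    log_mu = math.log(mu)
    log_term = -mu                      # log P(X = 0)
    total = math.exp(log_term)          # underflows harmlessly to 0.0 for large mu
    for i in range(1, k + 1):
        log_term += log_mu - math.log(i)
        total += math.exp(log_term)
    return min(total, 1.0)


def _solve_decreasing(f, target: float, lo: float, hi: float, iters: int = 100) -> float:
    """Bisection for f(mu) == target when f is decreasing in mu."""
    for _ in range(iters):
        mid = (lo + hi) / 2
        if f(mid) > target:
            lo = mid  # f still too large: the root lies at larger mu
        else:
            hi = mid
    return (lo + hi) / 2


def poisson_ci(k: int, alpha: float = 0.05) -> tuple[float, float]:
    """Exact (Garwood) two-sided confidence interval for a Poisson mean."""
    bracket = k + 20 * math.sqrt(k + 1) + 20
    lower = 0.0 if k == 0 else _solve_decreasing(
        lambda mu: poisson_cdf(k - 1, mu), 1 - alpha / 2, 0.0, bracket)
    upper = _solve_decreasing(lambda mu: poisson_cdf(k, mu), alpha / 2, 0.0, bracket)
    return lower, upper


def miles_per_accident(miles: float, accidents: int, alpha: float = 0.05):
    """Point estimate and (worst, best) interval for miles per accident."""
    lo_count, hi_count = poisson_ci(accidents, alpha)
    point = miles / accidents if accidents else math.inf
    worst = miles / hi_count  # more accidents -> fewer miles per accident
    best = miles / lo_count if lo_count > 0 else math.inf
    return point, worst, best


# Hypothetical inputs: same point estimate, very different sample sizes.
for label, miles, accidents in [("pilot", 1_000_000, 2), ("fleet", 1_000_000_000, 2_000)]:
    point, worst, best = miles_per_accident(miles, accidents)
    print(f"{label}: {point:,.0f} miles/accident (95% CI {worst:,.0f} to {best:,.0f})")
```

Both cases give 500,000 miles per accident, but the pilot's interval spans more than an order of magnitude while the fleet-scale interval is tight. At fleet scale, the harder question is how an accident is defined rather than sampling noise.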

Figure 4. Tesla’s Cybercab (robotaxi) and Robovan. Concept drawing from Tesla website

Elon Musk has claimed that FSD's performance is approaching human driving levels and may even surpass them next year, but quantifying this superiority remains a challenge. Winning public acceptance is a further hurdle. Tesla may seek support from Trump to shape the relevant regulations. In states such as Texas, Robotaxi operations face fewer regulatory hurdles than in California.

Miles per critical intervention measures how far the car drives, on average, between events in which the driver feels the need to brake or take control. Currently, drivers using FSD are not required to keep their hands on the wheel; they only need to keep their eyes on the road. Tesla has internal data on critical interventions, but it has not been fully disclosed.

Tesla also has a large online fan base, and community websites such as FSD Tracker let users report their own critical interventions. Because this data is self-reported, however, it varies significantly. Next year, as Tesla launches its robotaxi pilot, direct comparisons with companies like Waymo based on actual operating data should become possible.
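Aggregating self-reported data also raises a methodological choice. Under the simplifying assumption that all reporters share one underlying intervention rate, the pooled ratio (total miles over total critical interventions) is the maximum-likelihood estimate, whereas averaging each user's own ratio overweights short drives and drops reports with zero interventions. The reports below are hypothetical, not FSD Tracker data, and no estimator can correct for who chooses to report in the first place.

```python
from dataclasses import dataclass


@dataclass
class Report:
    """One hypothetical user report: miles driven and critical interventions logged."""
    miles: float
    critical_interventions: int


def pooled_mpci(reports):
    """Total miles divided by total critical interventions across all reports."""
    miles = sum(r.miles for r in reports)
    events = sum(r.critical_interventions for r in reports)
    return miles / events if events else float("inf")


def mean_of_ratios(reports):
    """Average of each report's own miles per intervention; zero-event reports drop out."""
    ratios = [r.miles / r.critical_interventions for r in reports if r.critical_interventions]
    return sum(ratios) / len(ratios) if ratios else float("inf")


# Hypothetical reports (not FSD Tracker data).
reports = [Report(50, 1), Report(2_000, 2), Report(300, 3), Report(120, 0)]
print(f"pooled:         {pooled_mpci(reports):.1f} miles per critical intervention")
print(f"mean of ratios: {mean_of_ratios(reports):.1f} miles per critical intervention")
```

Here the two estimates differ by about 7% on the same data, one reason crowd-sourced figures should be compared with care.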

Figure 5. Cumulative FSD miles. According to the Q3 2024 financial report, the cumulative mileage of FSD has reached 2 billion miles, with an additional accumulation of over 100 million miles per month.

Commercial Progress of the Tesla Bot

We believe the commercialization of Robotaxis will progress faster than that of the Tesla Bot. From a technological perspective, we hope to see a clear scaling law in robotics as well. For Robotaxis, Waymo has already validated the business model in San Francisco; as we mentioned earlier, its operations there have gone well, with several hundred vehicles currently in service.

Figure 6. Tesla Bot. Concept drawing from Tesla website

Tesla has also demonstrated the feasibility of scaling effects. The progression from V12 to V12.4 and V12.5, alongside the growing number of H100s used in training, provides several data points that can be used to predict future gains.

In contrast, the biggest challenge facing robotics today is the lack of data, which has kept a clear scaling effect from emerging. Through discussions with scholars focused on robotics research, we have learned that a scaling law for robotics has yet to be validated. Tesla has adopted a teleoperation ("remote operation") approach in its robot development. At the October 10 Robotaxi Day event, the robots' interactions with people, including bartending, were widely believed to have been controlled by remote operators. Although this method allows data collection, it is not particularly efficient.

Nvidia has also established a lab dedicated to researching robots in simulated environments, but no significant scaling law has been observed there either. Tesla plans to begin deploying robots in its factories next year. However, we believe it will initially rely on teleoperation-like methods, and it may take at least a year to collect enough data to properly assess the scaling effect in robotics.

Overall, we feel that the practical implementation of robotics technology may lag behind that of Robotaxis.

Competitors to Tesla in the Robotics Field

Boston Dynamics and other traditional robotics hardware companies are converging in their technical routes and development directions. Boston Dynamics, which used to focus on various drive systems, is now evolving toward more complete robotic bodies. However, advances in robotics require not only better hardware but also strong AI support. For a hardware-focused company, integrating the AI component is relatively difficult and requires significant investment.

In the U.S., startups such as Physical Intelligence still lag considerably behind Tesla. The main wildcard may be OpenAI: its early research included robotics, and it has invested in several robotics companies. It is worth watching whether OpenAI makes more significant moves in this field.

Google has a large robotics team, but recent internal turmoil has slowed its progress. Overall, Tesla does not appear to face particularly strong competitors in robotics.

Our Speculation on Tesla's Future Scaling Law Trajectory Compared with OpenAI

Figure 7. Evolution of the scaling law, Tesla vs. OpenAI. Tesla’s FSD is currently at roughly the GPT-2 to GPT-3 level, which leaves significant room for improvement.


Subscribe to FundamentalBottom to keep reading this post and get 7 days of free access to the full post archives.